Coincidence! Just like I did yesterday, Gary Gutting noted the misrepresentation of Mercier and Sperber at The Stone. Reason is primarily social and interpersonal--that's the point, not that reason is primarily for winning, rather than for truth.

***

M&S (see yesterday's post for links) are interested in the function of reason in an evolutionary sense--they want to know which benefit caused reason to exist in the first place. So they're trying to reach way, way back into the mists of time. Part of their case for the argumentative theory is that interpersonal arguing, not solo cogitation, is what reason does best now. Does that make sense? If X does B best now, then is that at least a strong clue that X evolved because it endowed our ancestors with B? I would think so, provided that conditions now are sufficiently like conditions millions of years ago. Conditions could be different. Maybe right now we spend more time yammering, and back then they were men and women of few words. It's kind of hard to imagine cave guys and gals having big debates like we do. We have a lot more time on our hands, what with the division of labor and modern technology and all. So ... I don't know. In any case, I don't think the interest of M&S's article lies entirely in their evolutionary argument. It would be very interesting even if it were just true now that interpersonal arguing is what reason does best. That would support saying that's what reason is for in some loose, but not uninteresting sense.

6/29/11

Reason is for Arguing

An article in the April issue of Behavioral and Brain Sciences has been getting a lot of attention--"Why Do Humans Reason? Arguments for an Argumentative Theory," by Hugo Mercier and Dan Sperber (see first item in this search). This is a summary Mercier gave to Chris Mooney--

Current philosophy and psychology are dominated by what can be called a classical, or ‘Cartesian’ view of reasoning. Even though this view goes back at least to some classical Greek philosophers, its most famous exposition is probably in Descartes. Put plainly, it’s the idea that the role of reasoning is to critically examine our beliefs so as to discard wrong-headed ones and thus create more reliable beliefs—knowledge. This knowledge is in turn supposed to help us make better decisions. This view is—we surmise—hard to reconcile with a wealth of evidence amassed by modern psychology. Tversky and Kahneman (and many others) have shown how fallible reasoning can be. Epstein (again, and many others) has shown that sometimes reasoning is unable to correct even the most blatantly incorrect intuitions. Others have shown that sometimes reasoning too much can make us worse off: it can unduly increase self-confidence, allow us to maintain erroneous beliefs, create distorted, polarized beliefs and enable us to violate our own moral intuitions by finding excuses for ourselves.

We claim that the full import of these results has not been properly gauged since most people still seem to agree with, or at least fail to question, the classical, Cartesian assumptions. Our theory—the argumentative theory of reasoning—suggests that instead of having a purely individual function, reasoning has a social and, more specifically, argumentative function. The function of reasoning would be to find and evaluate reasons in dialogic contexts—more plainly, to argue with others. Here’s a very quick summary of the evolutionary rationale behind this theory.

Communication is hugely important for humans, and there is good reason to believe that this has been the case throughout our evolution, as different types of collaborative—and therefore communicative—activities already played a big role in our ancestors’ lives (hunting, collecting, raising children, etc.). However, for communication to be possible, listeners have to have ways to discriminate reliable, trustworthy information from potentially dangerous information—otherwise speakers would be wont to abuse them through lies and deception. One way listeners and speakers can improve the reliability of communication is through arguments. The speaker gives a reason to accept a given conclusion. The listener can then evaluate this reason to decide whether she should accept the conclusion. In both cases, they will have used reasoning—to find and evaluate a reason respectively. If reasoning does its job properly, communication has been improved: a true conclusion is more likely to be supported by good arguments, and therefore accepted, thereby making both the speaker—who managed to convince the listener—and the listener—who acquired a potentially valuable piece of information—better off.

Mercier continues by looking at the negative side of reason being for interpersonal arguing, and particularly at the confirmation bias. Solo reasoning has its problems, and so does social reasoning.

Accounts of this article in the media and blogosphere sex it up in an odd way. For example, the article is discussed in a New York Times piece under the headline "Reason Seen More as Weapon Than Path to Truth." The author writes:

For centuries thinkers have assumed that the uniquely human capacity for reasoning has existed to let people reach beyond mere perception and reflex in the search for truth. Rationality allowed a solitary thinker to blaze a path to philosophical, moral and scientific enlightenment.

Now some researchers are suggesting that reason evolved for a completely different purpose: to win arguments.

Echoing the Times headline, Mooney calls his post "Is Reasoning Built for Winning Arguments, Rather than Finding Truth?" And yesterday Massimo Pigliucci wrote a post saying "Mercier and Sperber’s basic argument is that reason did not evolve to allow us to seek truth, but rather to win arguments with our fellow human beings."

Mercier has tried to clear up the misconceptions in a New York Times blog post. "We do not claim that reasoning has nothing to do with the truth," he writes, calling this a common misconception. The crucial contrast in the BBS article is not between truth and winning, it's between solitary reasoning and social reasoning.

This is the kind of article that achieves some measure of success just by putting forward a new and dramatically different possibility, even if it doesn't make an airtight case for it. I'm pretty accustomed to thinking about reason as a solitary, Cartesian business. M&S show there's another strong possibility, at the very least. That changes the way you think about many things--in fact, it changes the way you think about your own cognitive efforts.

Interesting upshot for philosophy instructors: if M&S are right, we need to be asking our students to spend more time engaging in carefully structured debates, and doing well-designed group assignments. In fact, the argumentative theory of reason explains something I've observed many times and found perplexing--the student who seems very capable when sparring in class, but not very capable in solo written work. If M&S are right, this is not anomalous at all. It's no more surprising than people being more agile on land than in water. Well of course--our physiology evolved that way.

The article's complicated and the follow-up commentaries are extensive--so this is a lot to chew on, but worth the effort, I think.

p.s. Aha--a short-cut. Here's a nice site put up by Mercier, with links to online discussion of the argumentative theory of reasoning. And information about more of Mercier's projects.

p.p.s. Another aha. Gary Gutting notes the way Mercier and Sperber have been misrepresented in a column at The Stone. Not truth vs. winning, but solo vs. social!

6/28/11

Is there a duty to adopt?

Back on the front page, since there's more discussion today--

Occasionally I've heard people write/say that there's a duty to adopt children, rather than procreate. I take it this is supposed to be a duty just for people who want children. Considering that there are existing children in need of parents, and considering that the world is already overpopulated, it's wrong to make new children--or so they say.

To think about this, it's going to help to go a bit hypothetical, because the real world situation is too complex, and some of the facts are in dispute. For example, the point about overpopulation is disputable. The total world population is too great, but some regional birth rates are too low: people aren't replacing themselves, which makes for long-term problems with funding social programs for the elderly. The other complexity is that although there are lots of children in institutions--Scott Simon says there are a million in Chinese orphanages--there are quotas and red tape. So there's a limit to how many people can actually fulfill their desire to be parents by adopting.

So here's the hypothetical situation. Pretend there's no population problem, and pretend there are plenty of children in need of adoption. In fact, there's an orphanage in your neighborhood. You want to be a parent. Must you adopt, rather than create a new child in that situation?

My vote is No. The main reason is that I think being a parent to a child means having a very intimate relationship with that child, and I am reluctant to think intimacy can be morally obligatory. Imagine the issue is not adopting children but "going out." Dave, the guy you like, has lots of brains and beauty, and a bubbly personality too! So if you drop Dave, he'll be fine. Dudley, on the other hand, has no brains or beauty, and he's very dull. You're his only chance. Must you go out with Dudley? No, of course not. Even if doing so would maximize utility, as the utilitarians say, you may go out with Dave.

When I subjected my husband and son to this argument, they viciously attacked me, pointing out that children are in need of adoptive parents in a much more serious way than Dudley is in need of a date. Yes, yes, yes, of course. But the point is that it is odd to think of any intimate behavior as morally obligatory. I grant that kids need a home much more than Dudley needs a kiss, but intimacies are involved in both cases, and I can't imagine intimacies being obligatory except in the most dire situation.

Now, you may say--what's so intimate about parenthood? But I hope not, because a lot is intimate about parenthood. And the intimacy of it is probably crucial--it's closely connected to the commitment people feel to their kids. It can't be my duty to enter into that intimate relationship with just any child, based on the child's need. In fact, I just may not be able to feel the right things for any child but my own. This may be narcissistic of me, but so be it--that might be the fact of the matter.

All that being said, I can imagine obligatory intimacies in very, very dire situations. The end of the world is nigh; you and Dudley are among the last remaining men and women. You don't care for Dudley, but then, there will be no more people if you don't have a "date." (Dave, as it turns out, was rendered infertile by the catastrophe that wiped out most of the human race.) Or: in that very dire situation, an abandoned child needs you (and there's no one around but you). Don't you have to become his parent?

So: "no obligatory intimacies" is not an absolute rule, but it does seem at least roughly, and in ordinary situations, correct.

6/26/11

Miss USA Discusses Evolution

You may find this amusing... or not.

Why do so many of these women say both evolution and the biblical creation story should be taught in schools? What's causing them to give the wrong answer? Probably just a desire to please, in many cases, but those who genuinely take this position need to be diagnosed. What's their problem?

#1 They don't understand the First Amendment to the US Constitution. Religion clearly can't be taught in public schools.

You might also add...

#2 They don't understand that the creation story is false, so it shouldn't be taught as a rival of evolution, which is true.

Then again, instead of adding #2, you might say--

#3 They think of evolution and the biblical creation story as being in competition, like the pro-choice and pro-life positions on abortion, which misleads them into thinking it's only fair for schools to teach both. But the creation story isn't really false; it's not even in the truth business--it's not that kind of thing.

I think #3 is what ultra-liberal religious people believe. They think the creation story--the first chapter of Genesis--is a poetic vision of the world. The really, really important part of the vision is the repeated phrase "and God saw that it was good." The message is about the value of this world, not at all about how the universe and the various species came to be.

If you see the creation story that way, it's no more in competition with evolution than poetry about the Civil War is in competition with a history book about the Civil War. There's no debate between creation and evolution, no reason to cover both in a biology class.

But should biblical creation be kept out of school altogether? An ultra-liberal religious Miss USA contestant ought to smile wildly, gesticulate gracefully, and say something like this--

It's fine if it's in a book of poetry or mythology, but because of the First Amendment, it can't be taught in the way it's taught in a religious setting. In that setting the creation story is taught as religion, which means, not necessarily as scientific or historical truth, but as something students are expected to cleave to as part of 'who they are' (as Jews, or Episcopalians, or whatever). So--evolution should be taught in science class, and the creation story possibly in some other class, but not as religion.

Next up: the swimsuit competition.

6/20/11

The Nomama Rule

A little birdie told me that some people think it's highly suspicious when moms come to the defense of their kids on Facebook. As in: suppose you're an author, and PZ Myers is beating you to a pulp, and you post a quick update to that effect, and your mom posts a supportive comment, but no different from what your pals are also saying. Is the fact that she's your mom a problem? Being a mom with kids on Facebook, I'd like to know... fast! Before I make any hideous mistakes.

So I asked my two 14-year-old kids about it. They pondered the issue for roughly half a second, and then made it clear I was never to write supportive comments, though I'm certainly their fb friend and comment extremely briefly once in a blue moon. Obviously, these fledglings need to make it clear to their fb friends that they can fly without any support. But then, the author in the example is not a teenager. He's an adult, and no doubt pretty secure in his ability to fly independently. He clearly hasn't told his mother not to comment. So...

So if Mom's comments are not a problem for our author, could they still legitimately be a problem for other readers--that is, other fb friends, since only fb friends can read them? But what (on earth) would be the problem? That a mom's biased? Well of course, but nobody runs their fb page like an impartial jury! Your girlfriend or boyfriend may comment, as can your circle of real-life loyal friends, your long-time ideological comrades, your fans, or what have you. So also, of course, if you're confident and secure, can your mom.

On Facebook people use real names, but out in the blogosphere, how many moms comment under pseudonyms? My guess is that over half of bloggers get comments from their moms, using non-obvious momonyms*. Relax everyone ... it's okay! Actually, it's more than okay. Especially when a mom is supporting her atheist, gay son (as in the case at hand). That's not just okay, it's hurray!

p.s. I had to invent a whole new category for this post.

* As in, not "Mom" spelled backwards.

6/19/11

"The balance of pleasure minus pain"

Hedonistic utilitarians say pleasure is the good. I spent roughly two hours enjoying a movie yesterday. The good in that situation, they say, is the sum of the pleasure I experienced, minute by minute, over those two hours. Of course, there is another dimension of experience--pain. Hedonists think pain is bad in a way commensurate with the way pleasure is good. The speaker system in the movie theater had a problem, causing me moments of displeasure throughout the movie. Add up the pain, subtract it from the total pleasure, and you get a balance. 100 - 25 = 75, let's say. 75 represents the good I got out of seeing the movie.

Except this seems all wrong. It's just not true that there's any equivalence between a mixed experience (100 - 25 = 75) and a shorter purely pleasurable experience (75 - 0 = 75). It would be perfectly reasonable for someone to prefer one over the other. The whole idea that pain and pleasure neatly sum, like bank deposits and debits, is one of those things that seems reasonable only if you never think about it. Rather than there being a sum, it seems to me there are just two dimensions, good and bad, and various ways they can relate to each other. Sometimes a person will think the bad is worth it for the good, and sometimes not, but this is not something you can determine by a simple calculation. It's not simply a question of whether the balance is positive or negative.
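To make the contrast concrete, here is a minimal Python sketch. The `Experience` class and its numbers are hypothetical, borrowed from the movie example (100 units of pleasure, 25 of pain) rather than from any real measure of hedonic value. It shows the hedonist's single balance treating the two experiences as equal, while a two-dimensional record keeps them apart. It deliberately stops there and doesn't try to compute a "worth it" judgment, since the point is that no simple calculation settles that.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Experience:
    """Hypothetical record of an experience: pleasure and pain kept as two separate totals."""
    pleasure: float
    pain: float

    def net_balance(self) -> float:
        # The hedonist's single number: total pleasure minus total pain.
        return self.pleasure - self.pain


# Hypothetical units, not a real measure of pleasure or pain.
movie = Experience(pleasure=100, pain=25)      # long film, faulty speakers
short_treat = Experience(pleasure=75, pain=0)  # shorter, purely pleasant

# Collapsed into one number, the two experiences look identical...
print(movie.net_balance(), short_treat.net_balance())  # 75 75

# ...but kept as two dimensions, they are plainly different experiences.
print(movie == short_treat)  # False
```

The frozen dataclass compares both fields, so equality fails even though the balances match; that is the whole gap between "a sum" and "two dimensions."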

Here's a mixed experience of recent memory, where the judgment could be "worth it" or "not worth it," and the balance does not decide the matter. On Mother's Day our family went to a sculpture museum, and my 14-year-old son dropped his iPod touch on a concrete floor. The screen shattered so badly you could see the innards of the device--shocking! He was not a happy boy. An hour later we were driving home past an Apple store. I suggested we go in and ask whether the device could be fixed. We talked to one of the geniuses at the Genius Bar (the Apple people are geniuses at naming things), and he said the thing wasn't fixable, but my son could have a new iPod for half price. We said thank you and we'd think about it, but then the guy turned the iPod on and saw my son's wallpaper, which was a photo of a bumper sticker that said "The road to hell is paved with Republicans." He told my son he liked the wallpaper so much he'd give him a new iPod touch for free--which he did. Now he was a very happy boy.

On the way home I asked him what he thought about this whole one hour period of his life. Was the misery worth it for the happiness, or not? I don't think this was a simple question of making a mathematical calculation. He wasn't thinking about whether he was now in the red or in the black. The judgment was a primitive "worth it" judgment--was it worth going through the first 59 minutes of that hour to get to the last, thrilling minute?

Answer (which surprised me): no. But it could have been yes, consistent with all the facts about quantities of pain and pleasure.

6/18/11

Should Atheists do Interfaith Work?

I'm late to the party--here's Chris Stedman saying yes, here's Josh Rosenau strengthening the case and Joe Hoffman saying maybe not, while others hate the whole idea (see the Stedman post for details).

The idea that atheists could be involved in interfaith work dawned on me a couple of years ago when I attended an interfaith panel on the crisis in Darfur. [Now I remember ... I wrote about this before!] The panel included a rabbi, a college chaplain and an imam. I was there as chair of a large Darfur initiative at the rabbi's synagogue. I found myself looking at things through two sets of eyes. I was proud of the rabbi. He had visited Darfuri refugee camps himself, and our initiative was nationally supported and very effective at raising money and awareness (Nicholas Kristof mentioned it in a 2005 column). On the other hand, it seemed to me the panel should have included someone with a non-theistic perspective. This couldn't just be an ethicist, or foreign affairs expert, or what not--this was a panel assembled to represent diverse religious perspectives. But why not an atheist or a secular humanist?

Some atheists really don't like the idea. The main objection seems to be that if atheists get involved with "inter-faith" work, that will send the message that atheism is based on faith. That, I think, is a superficial objection. In fact, religious people could have the same worry. Many believers believe because they think they have a sound argument to support their belief-- they don't believe "on faith." So "faith" is already being used loosely here. An inter-faith panel is really just a panel composed of members of different religious communities--faith, schmaith. So the real question is: are atheists members of religious communities?

Some are, some aren't. Just being an atheist doesn't really make you a member of such a community. But some atheists do organize themselves into groups in a religion-like way. In fact, I spoke at such a group on a recent Sunday morning. There was inspiring music, there were nice, warm messages, but it was all 100% godless. (And then there was my dark little talk about whether people ought to exist...!) I can easily imagine someone leading a "freethinker" initiative on Darfur, and thus having a basis for being included on that panel alongside the rabbi, the chaplain, and the imam.

What's left, then, as an objection to atheists doing interfaith work? There's this--and for some, I think it's the main thing: being on such a panel forces people into a posture of mutual respect. A foaming-at-the-mouth anti-Jewish imam isn't going to be invited, nor is a rabbi who despises Christianity, or a Christian who's constantly voicing contempt for non-Christians. To get on a panel like this, a freethinker has got to reject religious belief nicely, in just the "I respect you, but..." way that Jews, Christians, and Muslims disagree with each other. To the extent that the most visible sort of atheism today is less respectful than that, the interfaith-suitable freethinker is going to have to be different. And overtly different too--they're going to have to reassure religious leaders that they are "like this, not like that." That's the price of inclusion--and I think inclusion on that Darfur panel would have been desirable.

6/16/11

Weiner Resigns

I must say, I think it's a pity. Weiner made a total fool out of himself, but he's not in the same class as moral morons like Newt Gingrich and John Edwards. His biggest liability is that he looked so silly, with all his private pictures on public display, but what he did was small potatoes, comparatively speaking. Important to his wife, no doubt, but not of great importance to his constituents. To my mind, he was the latest Bill Clinton, another person who grossly embarrassed himself, but whose mistakes had little public importance. I'm glad Clinton overcame the public humiliation, and I figured Weiner could survive too. But no, not for now.

The Empty Universe

I've been reading Peter Singer's 3rd edition of Practical Ethics, trying to see what's new. One new thing is the discussion in chapter 5 (see here) about what we ought to be aiming for--a Peopled Universe, a Non-sentient Universe (a la David Benatar), or a Happy Sheep Universe, crammed with the maximum amount of happy life. He picks the Peopled Universe, based partly on premises from 2e, but also on ideas new to 3e.

The old ideas: (1) The "total view", rather than the "prior existence view." We must take into account the total result of our choices, not limit our focus to the impact on those who exist prior to, or apart from, our decisions. Both views lead to problems, but the second to worse problems. (2) Preference utilitarianism, not hedonistic utilitarianism. The good is desire-satisfaction, not simply happiness. People have vastly more desires than sheep, so potentially more satisfied desires, so potentially more good in their lives.

Take the total view plus preference utilitarianism together. Which universe should we aim for, based on that package? It depends how we evaluate preference satisfaction. We could take every chunk of preference satisfaction as a positive good. In that case, the Peopled Universe is best, because there's the most preference satisfaction in it. There's none in the empty universe, and less in the Happy Sheep Universe, because of the more limited potential of sheep to have preferences.

But that way of evaluating preference satisfaction is problematic. If I make you have a burning desire to eat marshmallows, through some devious chicanery or other, do we really want to say it's positively good if you get to have lots of marshmallows? An alternative is the debit view of preferences. We take an unsatisfied preference as a debit in a sort of ledger. When the preference is satisfied, the debit is erased. Making you want marshmallows is actually bad, but I can cancel it out by feeding you marshmallows.
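The two ways of scoring preferences can be made concrete with a toy ledger. The following Python sketch is only an illustration. The universe names come from the post, but the preference counts are invented and carry no empirical weight. The one thing that matters is the shape of the result. On the positive view, more satisfied preferences always win. On the debit view, a satisfied preference only cancels its own debit, so every unsatisfied preference left over counts against a world. That means a world with nothing on its ledger cannot be beaten.

```python
from dataclasses import dataclass


@dataclass
class Universe:
    name: str
    satisfied: int    # hypothetical count of satisfied preferences
    unsatisfied: int  # hypothetical count of unsatisfied preferences


def positive_view(u: Universe) -> int:
    # Every satisfied preference counts as a positive good.
    return u.satisfied


def debit_view(u: Universe) -> int:
    # Each preference opens a debit; satisfying it merely erases that debit,
    # so only the unsatisfied preferences remain on the ledger (as negatives).
    return -u.unsatisfied


def rank(universes, view):
    # Best universe first under the given way of scoring.
    return [u.name for u in sorted(universes, key=view, reverse=True)]


# Made-up numbers, chosen only so that each populated world has some
# preferences that go unsatisfied.
universes = [
    Universe("Peopled", satisfied=900, unsatisfied=100),
    Universe("Happy Sheep", satisfied=300, unsatisfied=10),
    Universe("Non-Sentient", satisfied=0, unsatisfied=0),
]

print(rank(universes, positive_view))  # ['Peopled', 'Happy Sheep', 'Non-Sentient']
print(rank(universes, debit_view))     # ['Non-Sentient', 'Happy Sheep', 'Peopled']
```

The order of the two populated worlds under the debit view depends entirely on the invented counts. The robust point is that the Non-Sentient Universe scores zero and every populated world with any unsatisfied preference scores below zero.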

With this adjustment, we now get a different ranking of the three worlds. The Non-Sentient Universe comes in as #1, since the others have uncanceled debits. This doesn't sit well with Singer (or with me, either). There are other ways you could look at preference satisfaction, having to do with the way preferences are generated, but Singer makes a much bigger move away from 2e. He suggests (p. 117) that what has value is not just preference-satisfaction--

We could try to distinguish two kinds of value: preference dependent value, which depends on the existence of beings with preferences and is tied to the preferences of those specific beings, and value that is independent of preferences. When we say that the Peopled Universe is better than the nonsentient Universe, we are referring to value that is independent of preferences.

Like what? What, besides a satisfied preference, might have value?

We could hold a pluralist view of value and consider that love, friendship, knowledge and the appreciation of beauty, as well as pleasure or happiness, are all of value.

The Peopled Universe has more of those positive goods than the Non-Sentient or Happy Sheep universes, so it's best. Singer's tone in these passages is exploratory and respectful of the difficulty of the issues and the diversity of positions. Benatar's preference for the empty universe gets a respectful nod. But Singer seems willing to complicate his own ethical theory in order to avoid the ultra-gloomy view that the best world is the most barren.

6/11/11

The Google Guitar

Going through withdrawal because it's gone? Never fear, you can still find it here. Coolest Google logo ever!

6/10/11

The Red Market

NPR had an amazing and very disturbing segment tonight on The Red Market, by Scott Carney, which sounds like a must read.

Moderating is probably going to be slow for the next couple of days. Don't think I've ditched a comment just because it's slow to appear.

6/9/11

Have More Kids!

Planning on having one kid? Why not two? If two, then consider three. That's the main message of this book, which you might consider unpromising, but everything along the way is interesting, and also helpful to new parents--whether they're thinking about "how many?" or not.

Economist Bryan Caplan's case for more kids--

(1) Kids make us happy, especially if we take into account the long term. Two might be ideal when they're very young and a lot of work, but six would be great when kids are grown up and no trouble. The rational consumer will average the two numbers and have four...or some such.

(2) You think kids don't really make us happy? Well, the evidence for that is overstated, and to the extent they don't, it's because we make child rearing much too hard. Ease up, use the TV as a babysitter when you have to, cancel the ballet lessons, don't fret about "stranger danger"--don't worry, be happy.

(3) Think (2) is rubbish because easing up will ruin the kids? Not at all. There's a massive amount of research that shows that parents have little influence on what their kids will be like when they grow up. You can mold them as kids, but they'll bounce back as adults. (He has a very long summary of twin and adoption studies to back this up.) Exceptions: you can influence your kids' political and religious affiliations and how they think of you as a parent.

(4) Not enough kids are being born now to support the elderly. A couple with lots of kids is doing more to support the elderly, since each kid will grow up and pay into the social security system, yet the couple will eventually receive no more assistance in old age than any other. So we should see people with lots of kids as altruists, not as irresponsible resource-depleters.

(5) Lots of people means lots of ideas and innovations--we are better off in a world with 6 billion, 7 billion, 8 billion ... people.

(6) Yes, environmental impact is something to worry about, but we should home in on a solution to that problem, not throw the baby out with the bathwater.

(7) Procreation is good for kids, because life is good. People should have total procreative liberty--it's fine to use genetic screening, testing, or engineering to create whatever children we'd prefer. Sex selection is fine, making taller, smarter kids is fine, even cloning is fine. After all, none of these things is going to yield children who are sorry they were born. That's the critical question.

Even if you find yourself disagreeing with much of this (I think he doesn't take population and environment issues seriously enough), Caplan will change your mind about a fair number of things, and he's entertaining and amiable in the process.

6/7/11

I Was a Teenage "Gnu" Atheist

I was cleaning out a closet over the weekend and found something that amused me--an English composition book from when I was 14 years old (the same age my two kids are right now). I wrote essays about the Kent State shootings, the generation gap, drugs, and a few lighter topics. Plus, there was an essay trashing the Pledge of Allegiance. Why should John, if he's black, pledge faithfulness to a country that "may be prejudiced against him," I ask. I also complain about the god part. "John may not believe in god," I write, "but the pledge that is meant for everyone in the United States says that our country has a god." Note how I militantly refuse to capitalize! (The teacher corrected me.) In fact, when I was that age, I either skipped the "god" part, or didn't say the pledge at all. In my no pledge phase, I got harassed in the halls. The harassers called me a "kike"--my being Jewish was more visible than my being an atheist. Not literally visible, but there were about three Jewish kids in each grade, and everyone knew who they were.

A funny thing--my kids tell me they don't say the god part of the pledge, but now, when I attend a school function, I do. I simply take it less seriously than I did at age 14. It's like the bit in "America the Beautiful" about amber waves of grain and purple mountains' majesty. Just part of the tapestry. But that's fine--there's a time for being literal and rebellious.

6/6/11

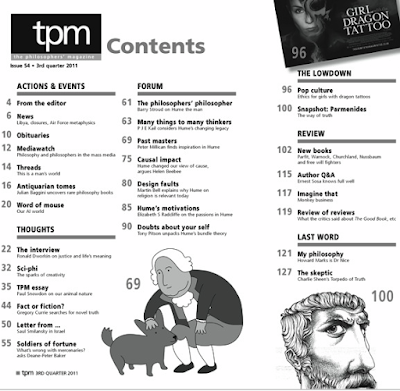

New Philosophers' Magazine


The new issue of The Philosophers' Magazine is full of good stuff--see above. In the reviews section, which I've started editing, we've got Neil Levy on Patricia Churchland (Braintrust), Thom Brooks on Martha Nussbaum (Creating Capabilities), Jussi Suikkanen on Derek Parfit (On What Matters), Scott Aikin and Robert Talisse on Mary Warnock (Dishonest to God), Troy Jollimore on Hubert Dreyfus and Sean Kelly (All Things Shining), and "at the movies," Dana Nelkin and Sam Rickless on The Adjustment Bureau. My column is about The Evolution of Bruno Littlemore, a novel by Benjamin Hale. Seriously weird, totally great. More about the magazine and how to subscribe is here.

6/5/11

Is Worship Morally Wrong?

Scott Aikin and Robert Talisse try to make a "moral" case for atheism in their new book Reasonable Atheism: A Moral Case for Respectful Disbelief. In other words, they generate the conclusion that there is no god from assumptions about what's moral and immoral. Part of this is the familiar argument from evil, but less familiarly, they make an argument from worship. It's quite simple--

(1) If there is a god, then there's something we should worship.

(2) There's nothing we should worship.

(C) There is no god.
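This argument has a valid form: it is a case of modus tollens. Anyone who grants both premises is committed to the conclusion, which is why the whole dispute turns on whether to grant them. Here is a minimal Lean 4 rendering. The letters G ("there is a god") and W ("there's something we should worship") are labels introduced here, not the authors' notation.

```lean
-- G : there is a god
-- W : there's something we should worship
-- p1 is premise (1), p2 is premise (2); the goal ¬G is conclusion (C).
theorem worship_argument (G W : Prop) (p1 : G → W) (p2 : ¬W) : ¬G :=
  fun hG => p2 (p1 hG)
```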

(1), they say, is true by definition. God (on the sort of theism they're examining) is all-knowing, all-good, and all-powerful and something humans are obligated to worship.

(2) is a moral claim which they defend by making worship out to be something tremendously self-abnegating, and so inconsistent with human dignity.

Now, if worship requires unquestioning and unqualified obedience, it seems that the only theologically acceptable free act we can perform is the act of submitting our lives to God. And once we submit to God, we must abdicate our capacity for independent moral judgment. Once again, if God deserves all-in trust, praise, love, and so on, then to question--or even to wonder about--any of His demands is to sin. Actions done independently of God's commands, even if they accord with what God command, are nevertheless failures of obedience. (p. 151)

I think Jews and Christians (I don't know about Muslims) will simply reject this account of what worship amounts to. Reread the last sentence. It makes it look as if God orders us about all day long. There's no reason why theists should understand God this way. In fact, the idea that God made humans "in his image" doesn't comport with the idea that we are robots under his constant command. No, we are like him--we are self-governing. If he does issue some commands--"thou shalt not kill"--how does it compromise human autonomy for us to "have to" obey them? On any objective morality, there are things we "have to" do. On the other hand, if we must define "worship" in this extreme way, I'd just reject (1), if I were a theist. No, it's not a part of the concept of God that we are his slaves. Note: it's "God the father" not "God the master." So: the argument seems easily dismissed by theists.

And yet, and yet. I think Aikin and Talisse do raise an interesting question. Namely: what is this thing called "worship" and how could it be good, not bad? Even if worshiping God is not a question of constant servility, how could it be self-respecting to prostrate yourself even just by bowing your head at a religious service? Isn't even that a bit servile--so a bit bad?

Then again, is "worship" even a necessary term? Perhaps the state of mind in a church or synagogue can be reverence--a much less submissive thing than worship. One could say congregants are experiencing a brief interlude of humility--and isn't it positive to periodically practice that virtue? Are they being slavish (bad) or are they briefly putting aside self, and focusing on the community? If you're entirely focused on the words of liturgy, you'll think worship is grovelling. But if you think about what people are actually experiencing, it may be something else.

Now I will "confess": I personally do not worship--I just can't do it, because I think there's nothing to worship. But I actually like being part of a worshiping group, at least from time to time. This is what always strikes me: the people in my particular Reform Jewish congregation are movers and shakers. Every other day of the week, they have lots of individual power and wealth. Yet they come together and in some sense lower themselves, becoming just one member of a group. Standing to show respect, bowing, and various other acts, are ways of acting out this focus beyond the self. I find that just the opposite of repellent, and actually moving.

So--is worship incompatible with human dignity? It depends enormously on what worship is. I think a "reasonable atheist" will not jump to conclusions.

6/3/11

Absent Pains

Paris - with no Tube smell or Arkansas pigs or monsoons

Jeez--thinking about absent pains of absent people is tricky, so it seems reasonable to think instead about the absent pains of actual people. After all, we are better at thinking about actual people.

So that's what I'm doing this afternoon. Fending off my children (now 14 + 14), because today is the first day of summer vacation, and thinking about absent pains. Ahem. Somebody's got to do it.

I asked my family the following question at the dinner table last night, and the aforementioned 14s literally ran away. When they were younger they were much better philosophical guinea pigs. Question: Suppose you go to Paris, and there are these good and bad things about your experience---

GOOD: taste of croissants, pleasure of seeing real Renoirs

BAD: ugh, all the foie gras on the menus, pain when gravel gets into shoes at Tuileries

On the other hand, various pains are absent. Like for example, that yucky electrical smell in the London Underground. So... should we count the absent pains as being among the good things?

That was my question, but then, as the kids fled, my husband and I came up with more absences. If you go to Paris, you also don't have to suffer the smell miseries of an Arkansas pig farm. And there probably won't be a monsoon that soaks all your clothes.

Are all these absent pains to be added to the above list of Paris "goods"?

Even without help from the 14s, we decided: no. In fact, obviously not. The BADS in London, or Arkansas, or Indonesia, are points in favor of going to Paris, but they aren't GOODS in Paris.

This seems really clear. But if that's so, then why (on earth) would we take the absent pains of absent people as good? It strikes me that it's all the same. This ultra-simple point is actually devastating to Benatar, so I'd like to be sure I'm not missing something.

6/2/11

Scorning into Silence

I've semi-retired from online-atheism watching (it got too repetitive--no, I wasn't scorned into silence), but I'm glad someone else is on the job. You'll have to wade through the comments here before you can appreciate Jeremy's response.
